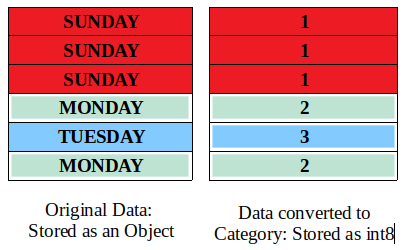

## Processing Large Data with Pandas ### By Evelyn J. Boettcher #### DiDacTex, LLC Py Ohio 2022 <br/> ### Link to Repo [https://github.com/ejboettcher/evelyn_j_boettcher_cv](https://github.com/ejboettcher/evelyn_j_boettcher_cv/tree/main/_pandas) --- ## Motivation Data sets can get large quickly. You can quickly go from looking at a few 100 rows and a handful of columns to a million rows and hundred of columns. <br/> ### If you find yourself saying, #### "My data is BIG data, so I neeed a better computer." Well this talk is for you. I will show how python pandas library with smart use of categories can allow you to read in large data on a laptop. Luckily, we live in a time where the processing power on a laptops would have made the engineers who put a man on the moon; swoon. --- ## Large Data: Big data has been around for a long time. ### Attributes of Large Data * Access controlled * Shows what it shows * <b>Grows</b> --- ## Access controlled: planet datasets In the 1600, Johannes Kepler used Tycho Brahe large dataset on planet observations to prove how planets orbit the sun. <br/> + <!-- .element: class="fragment" --> Unfortunately, Kepler had to wait until Tycho Brahe died before he could get a hold of a large data set. <br/> + <!-- .element: class="fragment" --> Many thought he played a hand in Brahe's death --- ## Large Data: Shows what it shows You always had to take time to understand it. ### Eclipse of IO In 1676 Ole Roemer (Rømer) armed with only paper, pencil, telescope and a mechanical watch, calculated the speed of LIGHT! Was within 30% simply by looking at Jupiter's moon Io. ### Prior to this people thought that light was instantaneous! His prediction for Io was off by a few minutes. --- ## Measuring the Speed of Light This required LOTS of observations over years and years by multiple people. Romer was calculating when Io would have an eclipse and not the speed of light; so he must have had to recalculate he calculations over and over again by hand to ensure there was not a mistake in the math or observations. <br/> ### Roemer's Conclusion + <!-- .element: class="fragment" --> My work is correct, <br/> + <!-- .element: class="fragment" --> the timing is off because light moves at a finite speed. --- ## Our Large Data We have it a bit easier, we only having to deal with: * NaNs: Not A Number * Slow downloads speeds We still have to deal with * Access control * Easter eggs in the data * Data growth <br/> ### But, you say, #### "My data is BIG (larger than my computer's working memory) and I want an answer today and not wait a decade." ### Lets talk about the Python library: Pandas. --- ## Python Pandas Python's Pandas is a high performance, easy to use library for analyzing structured data: csv files, json, SQLite etc. <br/> Pandas is fast, powerful and flexible. It enables you to quickly parse data. **But**, it is mainly designed to handle ~<100mb. <br/> There are other tools like Spark to handle LARGE data sets (100 gigabytes to terabytes), but... #### Pandas does an amazing job at cleaning messy / real data. --- ## How does one handle Large data with Pandas When you have a gigabyte of real world data and you want to: * Explore it * Use your laptop and * You don't want to switch to Spark. <br/> ### Step One: Use old programming tricks like set numbers to ***int8***, ***floats16***, or ***float32*** etc to reduce the memory size of your DataFrame. --- ## Second Step: Use Categories If you have strings that ***repeat*** SWITCH to ### categories <br/> ### String Examples #### Non-repeating strings ```Python string_list = ['Hello', 'World', 'More Strings', 'Evelyn','Boettcher'] ``` #### Repeating String Many times string data will be repetitive, like days of week. ```Python val_days = ['Monday', 'Tuesday', 'Monday', 'Wednesday', 'Monday', 'Thursday', 'Friday', 'Saturday', 'Monday', 'Monday', 'Sunday'] ``` --- ## Data Memory footprint (sans overhead) #### Numbers |memory usage | int| uint | float | bool | complex | |:---: |:----|:---- |:---- |:---- |:---- | |1 bytes | int8 (-128-127) | uint8 (0-255)| | bool | | |2 bytes | int16 (-32768 to 32767) | uint16 (0 to 65535) |float16 (Half precision)| | | |4 bytes | int32 | uint32 | float32 (Single precision) | | | |8 bytes |f int64 | uint64 |float64 | | complex64 (rep. by 2 32-bit floats) | #### Strings Python uses three kinds of internal representations for Unicode strings: * 1 byte per char (Latin-1 encoding) * 2 bytes per char (UCS-2 encoding) * 4 bytes per char (UCS-4 encoding) --- ## How does Panda's categories work? Pandas category type uses integer values to map to the raw values in a column. <br/> This mapping is useful whenever a column contains a limited set of values. So instead of writing #### df.mydays = ["Sunday", "Sunday", "Sunday"] <br/> Pandas categories says #### Sunday = 1 and the DataFrame **in memory** is effectively now #### df.mydays = [1, 1, 1] --- ## Convert Data to Categories To convert a column of data to the category we set the data type (dtype). ```Python df['column name'].astype('category') ```  ### But wait.. Converting to categories is not always helpful. <br/> The following examples will show the power and pitfalls of categories --- ## Examples The following scripts are located here [Pandas Scripts](https://github.com/ejboettcher/evelyn_j_boettcher_cv/tree/main/_pandas/scripts) You can run these scripts and see for yourself. The scripts build the DF and then measures the size before and after conversion to categories --- ## Big Data: Numbers Only * Script: int_floats_cats.py <br/> ```bash Building three DF with: Range of numbers 1- 4 Length of DF 10_000 Top 10 rows of data INT INT8 FLOAT 1 2 4 3.329446 2 2 4 1.478631 3 2 3 1.338759 4 3 1 1.846993 5 1 2 2.787727 6 4 3 1.116634 7 1 2 1.064875 8 3 3 3.146007 9 3 2 2.201730 Size of the DF we just made INT 0.0764MB INT8 0.0096MB FLOAT 0.0764MB ____________________ As categories (plain df, category, SAVINGS): INT df ---> 0.0764MB , 0.0098MB , 87 % INT8 df ---> 0.0096MB , 0.0098MB , -2 % ___NOTE______NOTE______NOTE______NOTE___ Categories reduced the size INT df!! BUT because of the overhead it did not reduce the int8 size Now, lets try this with random Floats Float ---> 0.0764MB Float category---> 0.4079MB Categories only made the DF memory use worse All Done ``` --- ## Large Data: Numbers cont.. ### Increase SIZE ```bash python int_floats_cats.py -r 4 -n 1_000_000 ``` ```Bash Building three DF with: range of numbers 1 - 4 length of DF 1_000_000 Top 10 rows of data INT INT8 FLOAT 1 2 2 2.684429 2 2 4 1.696423 3 1 2 2.607256 4 3 1 1.247054 5 4 3 2.413925 6 2 3 3.141839 7 1 1 3.438698 8 2 2 3.584329 9 1 3 1.019402 Getting size of the DF we just made INT 7.6295MB INT8 0.9538MB FLOAT 7.6295MB ____________________ As categories (plain df, category, SAVINGS) INT df ---> 7.6295MB , 0.9539MB , 87 % INT8 df ---> 0.9538MB , 0.9539MB , 0 % ___NOTE______NOTE______NOTE______NOTE___ Categories reduced the size INT df!! BUT because of the overhead it did not reduce the int8 size Now, lets try this with random Floats Float ---> 7.6295MB Float category---> 51.4442MB Categories only made the DF memory use worse All Done ``` --- ## Large Data: Strings So lets see how we can reduce size of STRINGS arrays. If you have a column of strings that repeats, says days of week, states etc, then you might save memory if you switch to columns ```bash python strings_cat.py ``` ```Bash Building three DF with: length of random string in a row 1 - 4 length of DF 10_000 Top 10 rows of data Days HELLO Locations Days_c HELLO_c Locations_c Random_String 1 Tuesday World Beavercreek Tuesday World Beavercreek xhka 2 Wednesday Hello Oakwood Wednesday Hello Oakwood wiwh 3 Thursday World Fairfield Thursday World Fairfield gahf 4 Friday Hello Huber Heights Friday Hello Huber Heights ldou 5 Saturday World Riverdale Saturday World Riverdale rynl 6 Sunday Hello Dayton Sunday Hello Dayton oasv 7 Monday World Beavercreek Monday World Beavercreek nuym 8 Tuesday Hello Oakwood Tuesday Hello Oakwood gjvs 9 Wednesday World Fairfield Wednesday World Fairfield nmwn Getting size of the DF we just made String NO Categories 2.64MB String WITH Categories 0.0306MB Random String (1 column) 0.5MB ____________________ As categories String Columns: HELLO, Locations, Days (plain df, category, SAVINGS) NO CAT to category ---> 2.67MB , 0.03MB , 98 % Cat df to category ---> 0.0306MB , 0.0306MB , 0 % ___NOTE______NOTE______NOTE______NOTE___ Now, lets try this with random STRINGS String: Random ---> 0.5818MB String: Random category---> 0.9068MB Categories only made the DF memory use worse . ``` --- ## Large Data: STRING #### Now, lets make this BIGGGG * 1 million rows * 4 chars long in each cell ```bash python strings_cat.py -n 1_000_000 -r 4 ``` ```Bash Building three DF with: length of random string in a row 1 - 4 length of DF 1_000_000 Top 10 rows of data Days HELLO Locations Days_c HELLO_c Locations_c Random_String Tuesday World Beavercreek Tuesday World Beavercreek qbzd Wednesday Hello Oakwood Wednesday Hello Oakwood wixv Thursday World Fairfield Thursday World Fairfield vwjs Friday Hello Huber Heights Friday Hello Huber Heights xiiz Saturday World Riverdale Saturday World Riverdale owon Sunday Hello Dayton Sunday Hello Dayton jihl Monday World Beavercreek Monday World Beavercreek xwon Tuesday Hello Oakwood Tuesday Hello Oakwood zchj Wednesday World Fairfield Wednesday World Fairfield hnjt Size of the DF we just made String NO Categories 267.2MB String WITH Categories 2.8MB Random String (1 column) 58.17MB ____________________ As categories String Columns: HELLO, Locations, Days (plain df, category, SAVINGS) NO CAT to category ---> 267.2MB , 2.8MB , 98 % Cat df to category ---> 2.8MB , 2.8MB , 0 % ___NOTE______NOTE______NOTE______NOTE___ Now, lets try this with random STRINGS String: Random ---> 58.1MB String: Random category---> 47.3MB ____________________________________________________ WHAT....... There was an improvement!!! HOW DID THAT HAPPEN???? ____________________________________________________ . ``` #### There was an improvement!!! HOW DID THAT HAPPEN???? --- #### Interesting Fact: When we have a random string of characters of length 4 (26 char in the alphabet) <br/> (e.g. 26 x 26 x 26 x 26 = 456,976) <br/> Therefor over 1/2 of the strings should repeat! --- ## Larger Data: strings cont. ```bash python strings_cat.py -n 10_000_000 -s 1 -r 6 ``` This should produce a csv file called <br/> * *my_awesome.csv* with a size of 550.6MB and * *my_awesome_cat.csv* with size of 486.2 (No random str) <br/> --- ```bash Starting Building three DF with: length of random string in a row 1 - 6 length of DF 10_000_000 Top 10 rows of data Days HELLO ... Locations_c Random_String 1 Tuesday World ... Beavercreek ahlcks 2 Wednesday Hello ... Oakwood rwccxh 3 Thursday World ... Fairfield ihyieo 4 Friday Hello ... Huber Heights rtxevt 5 Saturday World ... Riverdale whpjhe 6 Sunday Hello ... Dayton vktted 7 Monday World ... Beavercreek klajfi 8 Tuesday Hello ... Oakwood eneums 9 Wednesday World ... Fairfield jvruya [9 rows x 7 columns] Size of the DF we just made String NO Categories 2672.3MB String WITH Categories 28.6MB Random String (1 column) 600.8MB ____________________ As categories String Columns: HELLO, Locations, Days (plain df, category, SAVINGS) NO CAT to category ---> 2672.8MB , 28.6122MB , 98 % Cat df to category ---> 28.6MB , 28.6123MB , 0 % ___NOTE______NOTE______NOTE______NOTE___ Now, lets try this with random STRINGS String: Random ---> 600.8MB String: Random category---> 949.3MB Categories only made the DF memory use worse . ``` --- ## Read in... `my_awesome_cat.csv` (Files size: ~486MB) </br> * Same data as my_awesome.csv, but **without** the column of random strings #### WOW The data in memory is **LESS** than the file size, by almost 90%! ```Bash USING CATEGORIES for columns that make sense. __________________________________________________ It took this many seconds to read in the csv file 7.85 __________________________________________________ Now lets look at size Memory of Df is: 57.2MB Top 4 rows of data HELLO Locations Days HELLO_c Locations_c Days_c 1 World Beavercreek Tuesday World Beavercreek Tuesday 2 Hello Oakwood Wednesday Hello Oakwood Wednesday 3 World Fairfield Thursday World Fairfield Thursday 4 Hello Huber Heights Friday Hello Huber Heights Friday 13 Sunday 20 Sunday 27 Sunday Name: Days, dtype: category Categories (7, object): [Friday, Monday, Saturday, Sunday, Thursday, Tuesday, Wednesday] __________________________________________________ ``` --- --- ## Conclusion * Pandas can handle data that most *GUI* readers (Office Libre, Excel) can not read in. * We reduced the size of data in working memory to LESS than the file size at rest (sometimes)! * Reduction can be as large as 90% * Categories can be **Helpful** or **Hurtful** when we are dealing with large data. * Please use Pandas with care <br> --- ## Questions ### Prayer to PyLint Code, don't fail again <br> CI in the cloud please take <br> pylint be my friend <br> ### Link to Repo [https://github.com/ejboettcher/evelyn_j_boettcher_cv/tree/main/_pandas](https://github.com/ejboettcher/evelyn_j_boettcher_cv/tree/main/_pandas) --- ## References * https://www.dataquest.io/blog/pandas-big-data/ * https://pandas.pydata.org/ * https://jakevdp.github.io/PythonDataScienceHandbook/02.01-understanding-data-types.html ### Slides These slides are written in Markdown and rendered in to HTML via the Jekyll Framework using RevealJS. It is uses, with modification, the following sites. * [RevealJS](https://revealjs.com/) * [RevealJS in Jekyll Framework](https://github.com/dploeger/jekyll-revealjs)